Find previous weeknotes here.

It was a scattered and chaotic week for me, with RC drawing to a close and my attention being demanded from multiple directions at once. But amidst that chaos I wrote some good code, read some interesting articles, and discovered some fresh ideas. Enjoy!

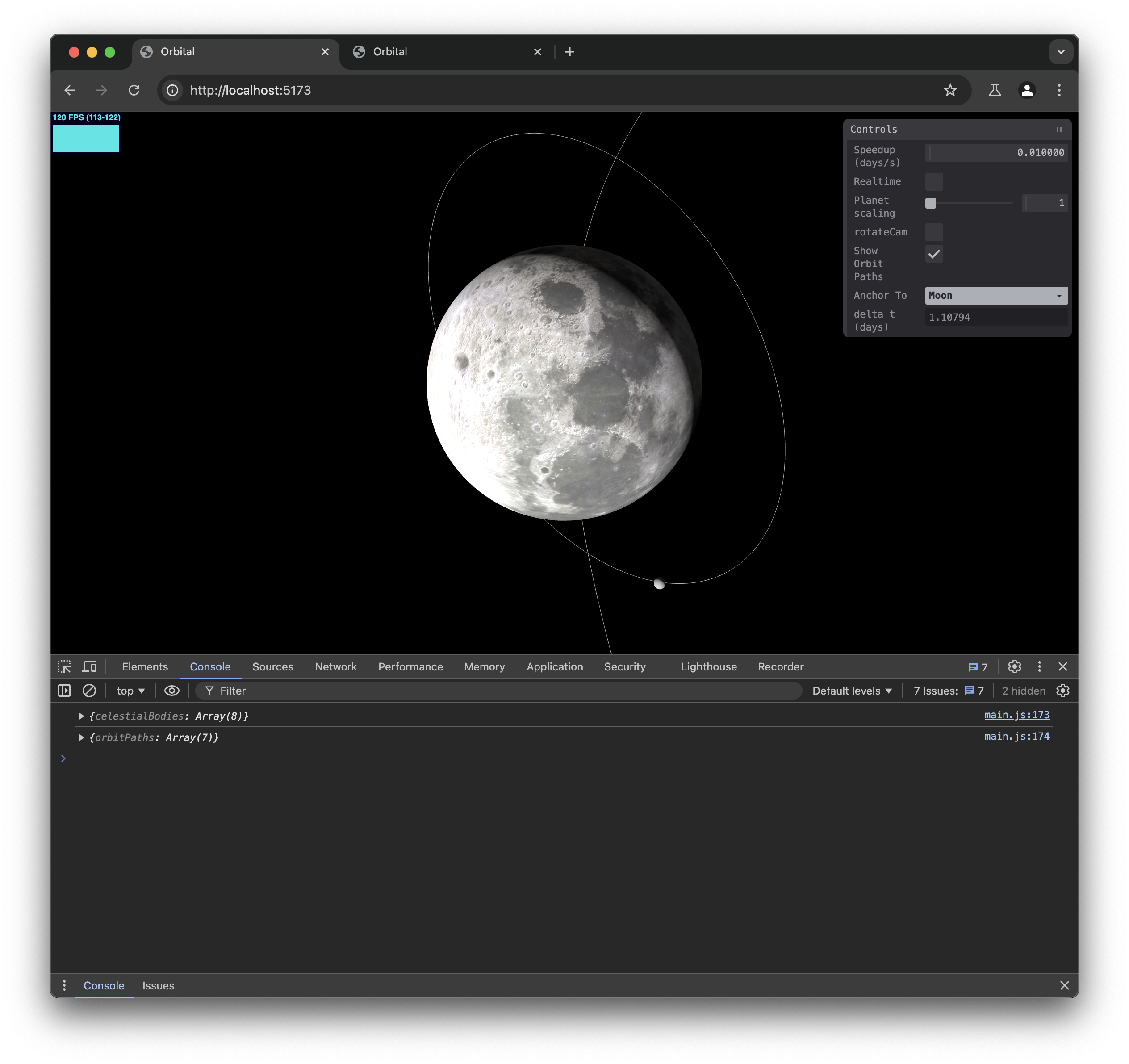

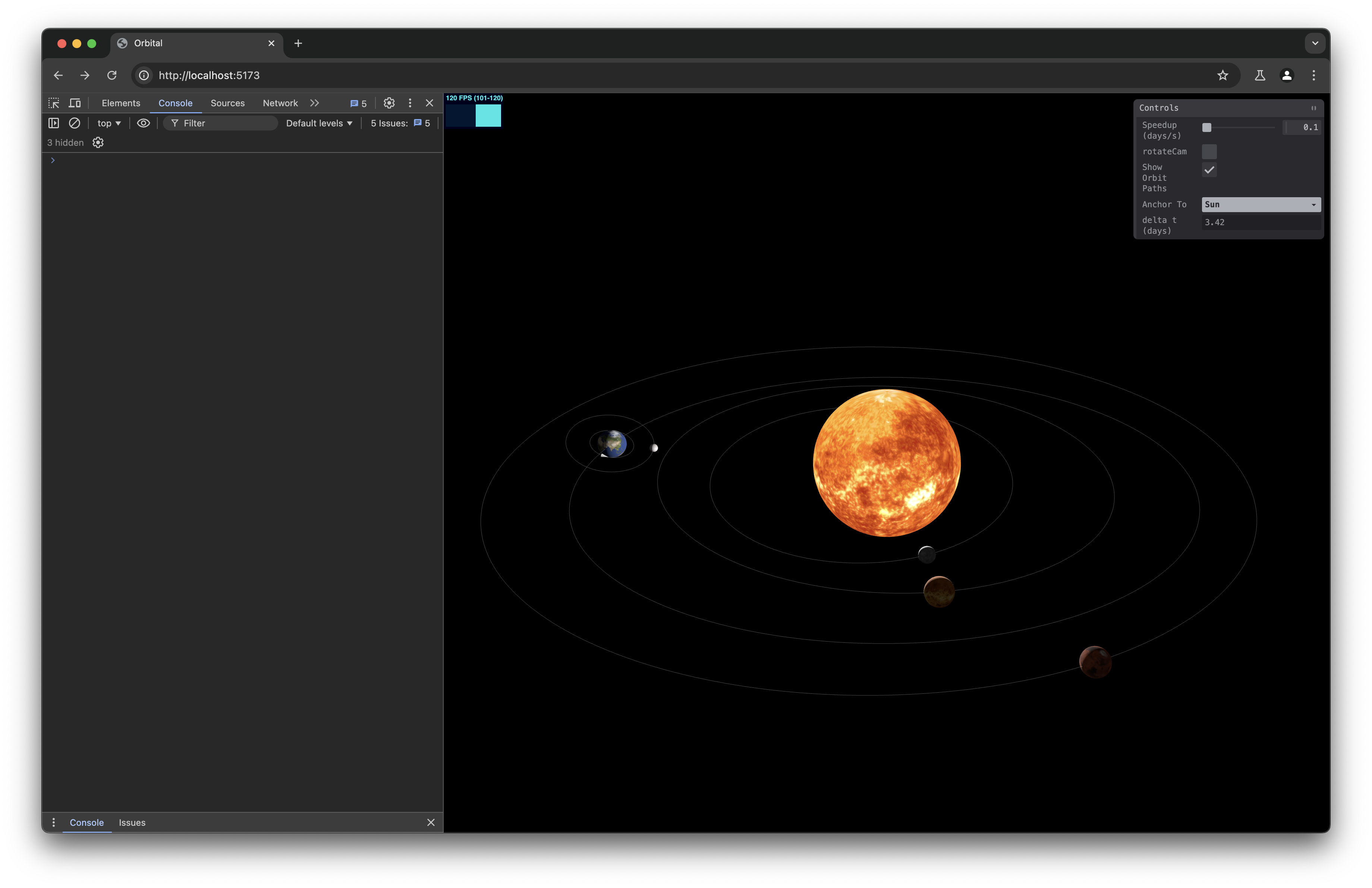

Orbital: A game-jam work in progress

The big event of the week was my second Recurse game jam (I wrote about my first one here).

A few of us set a theme (this time it was Sp00ky) and devoted as much time as we could over 3 days to working on our games, with blitz presentations to the whole of RC on Thursday.

I decided to mostly ignore the theme and double down on the space/orbit simulation I started last week.

You can try the current release live in your browser at gianluca-orbital.vercel.app or see the latest code at github.com/gianlucatruda/orbital

The idea was to evolve it into a full-blown orbital manoeuvres game where you complete puzzles (e.g. get from planet A to planet B) under certain constraints (mission time, delta-v). As it progresses, you’d have to make increasing use of clever gravity assists to achieve the mission within the constraints.

Unfortunately, I did my usual trick of being overly ambitious in my scope and then overloading myself with other commitments. I ended up only really getting around 8 hours of work on the project and ran into a number of bugs as I transitioned to a physically-accurate model.

One of the rabbit holes I fell down was attempting to migrate the physics engine to Rust with WASM bindings. In principle, this isn’t the worst idea, but I was pretty confident it would add a ton of complexity to the build and deploy processes. I justified that by it being a good way to practise more Rust, avoid writing vector operations in JS, and try out WebAssembly compilation. But ultimately I realised that interfacing between Rust-WASM and the JS frontend is a lot of additional work and fiddling, which is precisely the wrong thing to be doing under time constraints and on a rapidly-evolving experimental project.

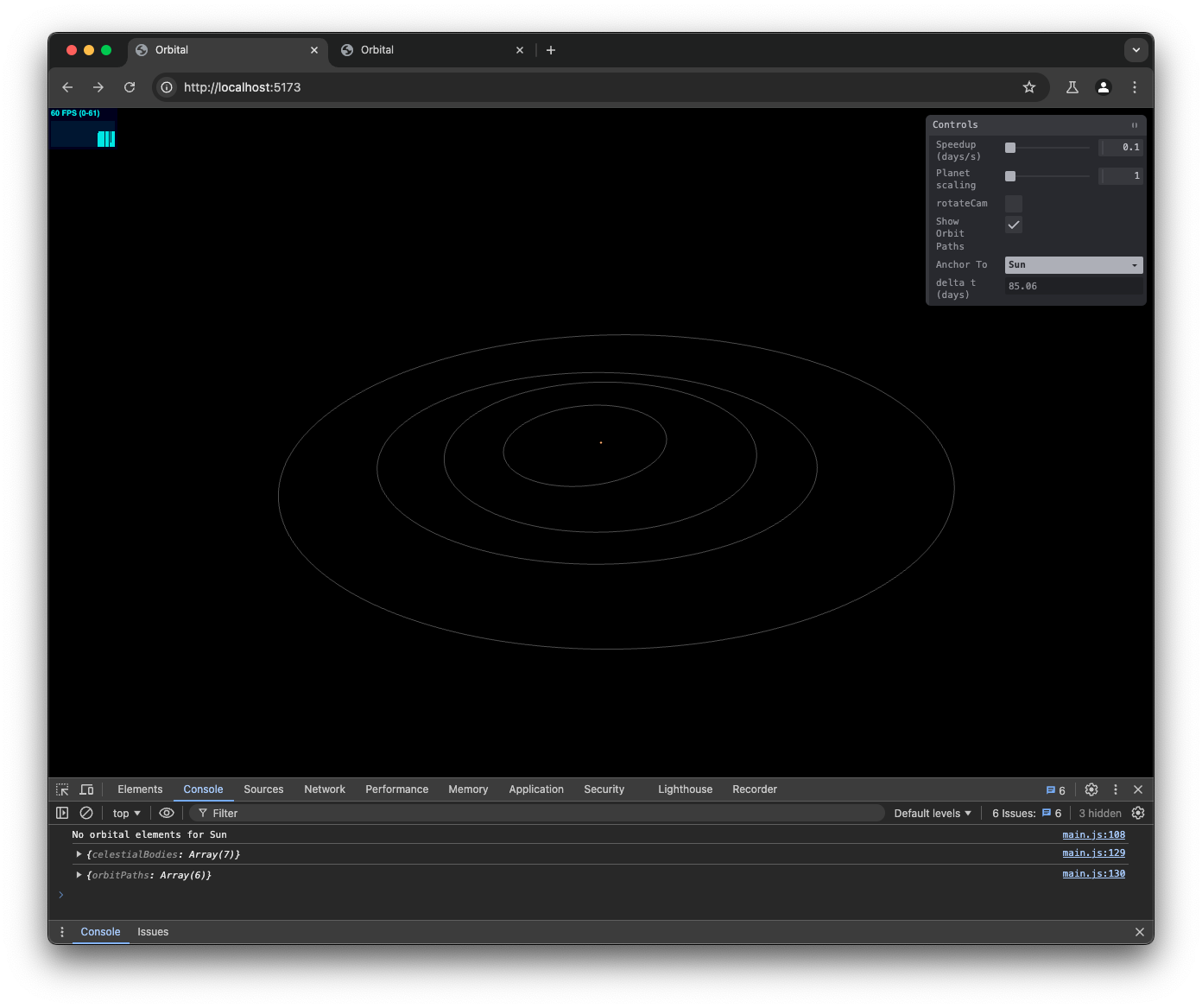

The second rabbit hole was trying to switch from a toy solar system to real-world physical accuracy. I’m still ultimately going this direction, but it introduced a load of additional issues and subtle numerical instability bugs.

The crux is this: the solar system is really big and really empty, but orbits are really fast and really precise. This is not a happy path for 3D scene animation or for 32-bit floats. It’s also tricky to visualise. I’m still working on the fixes in my dev branch.
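To make the 32-bit float problem concrete, here is a minimal Python sketch (not code from Orbital). It assumes positions are stored in metres, which is my assumption for illustration, and the helper names `to_float32` and `float32_spacing` are hypothetical. It measures how coarse a 32-bit float becomes at a distance of one astronomical unit.

```python
import struct

AU_METRES = 1.495978707e11  # one astronomical unit, in metres


def to_float32(x: float) -> float:
    """Round a 64-bit Python float to the nearest 32-bit float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def float32_spacing(x: float) -> float:
    """Gap between the float32 nearest to positive x and the next one above it."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    next_up = struct.unpack("<f", struct.pack("<I", bits + 1))[0]
    return next_up - to_float32(x)


spacing_at_1_au = float32_spacing(AU_METRES)
print(f"float32 resolution at 1 AU: {spacing_at_1_au:.0f} m")

# A 1 km nudge at this distance is below the resolution, so it rounds away.
nudged = to_float32(AU_METRES + 1_000.0)
print("1 km nudge lost:", nudged == to_float32(AU_METRES))
```

At that distance, neighbouring 32-bit values are roughly 16 km apart, so any motion smaller than that per frame simply disappears. Common workarounds include doing the physics in 64-bit and only converting to 32-bit for rendering, or keeping the scene origin near the camera, though which of these suits this project is an open question.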

Ultimately, I didn’t get to implementing any gameplay or even any spacecraft orbital physics (a whole separate system to the Keplerian orbital model used for the stable-orbit planets). But I did have a fun 3D simulation with camera and time controls to present to the rest of RC.
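For readers unfamiliar with what a Keplerian model involves, here is a rough Python sketch of the standard textbook approach: solve Kepler's equation for the eccentric anomaly, then convert that to a position in the orbital plane. This is not the Orbital implementation. The function names, parameters, and the Newton's method solver are my own choices for illustration.

```python
import math


def solve_eccentric_anomaly(mean_anomaly: float, eccentricity: float,
                            tolerance: float = 1e-12,
                            max_iterations: int = 50) -> float:
    """Solve Kepler's equation M = E - e*sin(E) for E with Newton's method."""
    # Starting at pi is a safer initial guess for highly eccentric orbits.
    ecc_anomaly = mean_anomaly if eccentricity < 0.8 else math.pi
    for _ in range(max_iterations):
        residual = ecc_anomaly - eccentricity * math.sin(ecc_anomaly) - mean_anomaly
        slope = 1.0 - eccentricity * math.cos(ecc_anomaly)
        delta = residual / slope
        ecc_anomaly -= delta
        if abs(delta) < tolerance:
            break
    return ecc_anomaly


def position_in_orbital_plane(semi_major_axis: float, eccentricity: float,
                              period: float, t: float) -> tuple[float, float]:
    """(x, y) relative to the focus at time t since periapsis.

    Periapsis lies on the +x axis; units follow semi_major_axis and period.
    """
    mean_anomaly = (2.0 * math.pi * t / period) % (2.0 * math.pi)
    ecc_anomaly = solve_eccentric_anomaly(mean_anomaly, eccentricity)
    x = semi_major_axis * (math.cos(ecc_anomaly) - eccentricity)
    y = semi_major_axis * math.sqrt(1.0 - eccentricity ** 2) * math.sin(ecc_anomaly)
    return x, y


# Roughly Earth-like values: a = 1 AU, e = 0.0167, period = 365.25 days.
print(position_in_orbital_plane(1.0, 0.0167, 365.25, t=0.0))
print(position_in_orbital_plane(1.0, 0.0167, 365.25, t=365.25 / 2))
```

Because the position is a closed-form function of time, the planets never drift, which is why this kind of model works for stable orbits. A spacecraft that fires its engines changes its orbit, so it needs a separate treatment.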

This week’s side quests

As part of adding too many additional constraints to my week, I also:

- Hybrid attended a two-day AI conference.

- Joined the weekly ML Paper Cuts group session where we went deep on the Denoising Diffusion Probabilistic Models (DDPM) paper and classifier-free guidance.

- Completed and reviewed both 2001: A Space Odyssey and Exhalation

- Updated my `.vimrc` based on a nice simple fallback config across iVim on my iPad and various terminals on my Mac and remote Linux machines.

Ideas to hear

Diffusion is autoregression in frequency space

I’ve been riffing on the connections between diffusion models (like DALL·E and Midjourney) and autoregressive models (like all the LLMs) as a way to deepen my understanding of both paradigms.

Some rough notes:

- In autoregression, we mask one whole value/token/pixel at each step.

- In denoising diffusion models (DDMs), we mask a bit of all values/tokens/pixels at each step.

- Both autoregression and diffusion are about sequentially subtracting information and having a model learn to sequentially restore the information.

- DDMs are autoregressors, but in the steps of the diffusion process

- Diffusion models are autoregressive, but across noise levels rather than time steps
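The first two notes above can be sketched as a pair of toy corruption functions in Python. This only illustrates the forward "subtract information" step, with no model involved. The function names are hypothetical and the linear noise schedule is a simplification I picked for readability, not the schedule from the DDPM paper.

```python
import math
import random


def autoregressive_corruption(values: list, step: int) -> list:
    """Hide the last `step` values entirely; earlier values stay untouched."""
    keep = max(len(values) - step, 0)
    return values[:keep] + [None] * (len(values) - keep)


def diffusion_corruption(values: list, step: int, total_steps: int,
                         rng: random.Random) -> list:
    """Blend every value part of the way towards Gaussian noise."""
    # Toy linear schedule (an assumption): fraction of signal variance kept.
    signal_kept = 1.0 - step / total_steps
    return [
        math.sqrt(signal_kept) * v + math.sqrt(1.0 - signal_kept) * rng.gauss(0.0, 1.0)
        for v in values
    ]


data = [0.9, -0.4, 0.1, 0.7]
rng = random.Random(0)
for step in range(5):
    print(step, autoregressive_corruption(data, step),
          [round(v, 2) for v in diffusion_corruption(data, step, 4, rng)])
```

In both cases step 0 is the original data and the final step carries no information about it. The difference is whether each step removes all of one value or a little of every value.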

Then Alexa from RC shared something that nudged this along:

[Diffusion] is a soft version of autoregression in frequency space, or if you want to make it sound fancier, approximate spectral autoregression.

— Diffusion is spectral autoregression

Now we’re cooking! Don’t forget, diffusion models are also evolutionary algorithms.

Accountability sinks

Organisations create accountability sinks to decouple decision-makers from the consequences of their actions. These structures break feedback loops, preventing those affected from influencing decisions or identifying who is responsible.

Here’s an example: a higher up at a hospitality company decides to reduce the size of its cleaning staff, because it improves the numbers on a balance sheet somewhere. Later, you are trying to check into a room, but it’s not ready and the clerk can’t tell you when it will be; they can offer a voucher, but what you need is a room. There’s no one to call to complain, no way to communicate back to that distant leader that they’ve scotched your plans. The accountability is swallowed up into a void, lost forever.

— A Working Library: Accountability Sinks

Chollet’s model of prompt engineering

If a LLM is like a database of millions of vector programs, then a prompt is like a search query in that database […] this “program database” is continuous and interpolative — it’s not a discrete set of programs. This means that a slightly different prompt, like “Lyrically rephrase this text in the style of x” would still have pointed to a very similar location in program space, resulting in a program that would behave pretty closely but not quite identically. […] Prompt engineering is the process of searching through program space to find the program that empirically seems to perform best on your target task.

– How I think about LLM prompt engineering via Simon Willison

Fun links

- A superb article on software engineering and refactoring: Write code that is easy to delete, not easy to extend

- The Apple paper that used clever tricks to see if LLMs can actually reason. Smart experiment design — simple but devastating — and somewhat unsurprising, but notable results. Via this YouTube video.

- A delightful walkthrough of how Arithmetic is an underrated world-modeling technology, featuring chimps, big blocks, and unit algebra. I would have classified this under “Fermi estimates” but the points about unit algebra and the worked examples give some helpful nuance whether you’ve heard of those before or not.

- Discovered DeskPad: A virtual monitor for screen sharing and installed it with brew. This could be very handy for screencasts and presentations.

- I went on a bit of a deep dive last week on rocket engines:

    - How SpaceX reinvented the rocket engine had some brilliant diagrams and a superb explanation that actually clicked for me, covering the (open-cycle, Kerolox) Merlin engines used on Falcon and the transition to (closed-cycle, dual-turbine, Methalox) Raptor engines used on Starship.

    - Why does SpaceX use 33 engines while NASA used just 5? tl;dr: for better reliability and more control over thrust.

    - A fun Scott Manley video on nuclear thermal propulsion rocket engines.

Thanks for reading!