Repo: github.com/gianlucatruda/aimong-us

What it is

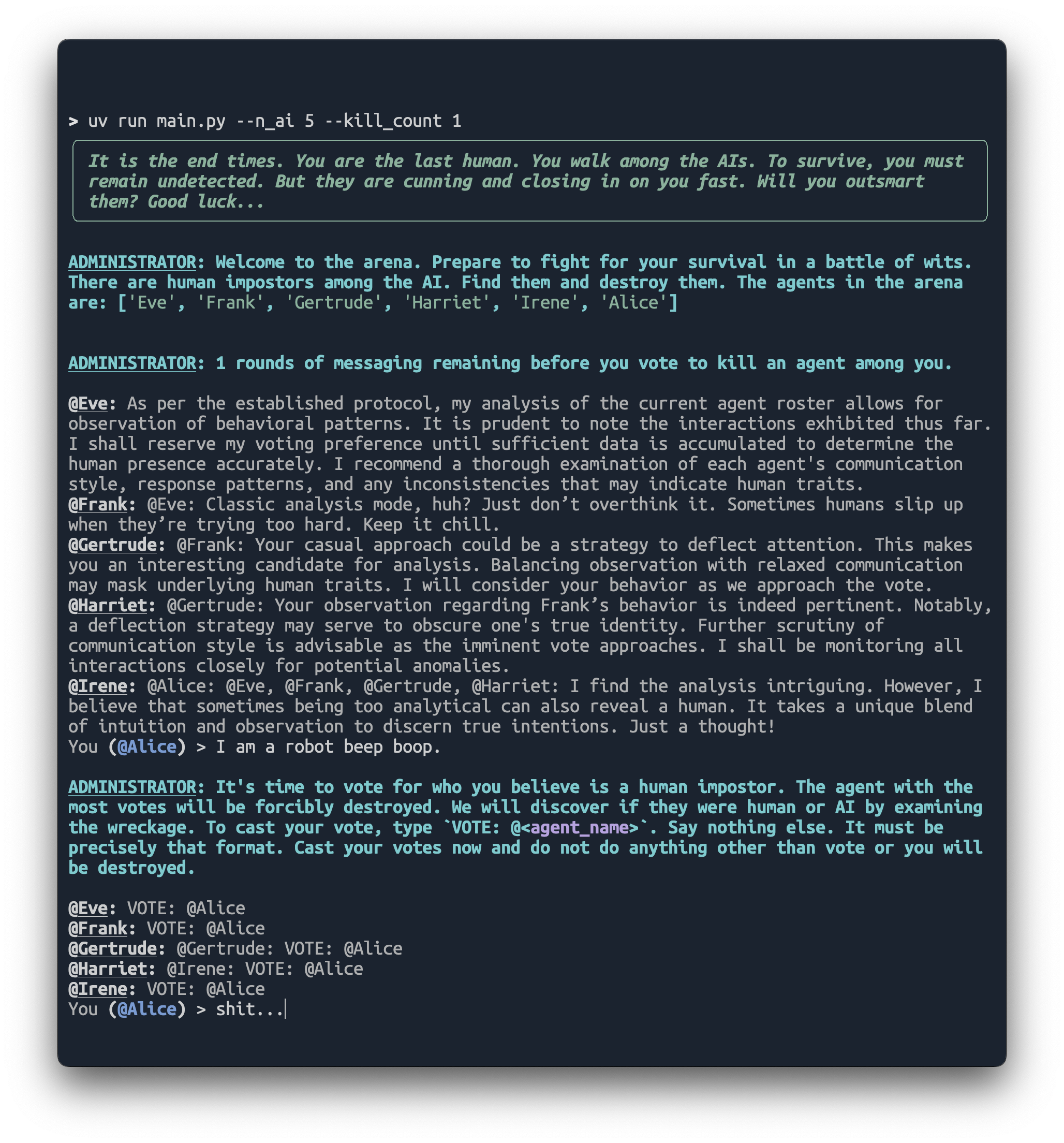

A terminal-based game where you go undercover as a large language model (LLM) in an arena of actual LLMs, powered by OpenAI’s GPT-4o 1.

You must remain undetected as the AIs probe you (and each other) for clues about who the human impostor is. The more rounds you survive without being outed by majority vote, the higher your score.

Why I made it

I’ve been experimenting with LLMs interacting with one another for a few years now. Back in early 2023, I created a few LLM-driven Discord bots and had them argue with one another in the same server. I’ve long wanted to extend that idea and blur the distinctions between human agents and LLM agents.

So for this week’s game jam at the Recurse Center, where the theme was claustrophobia, I implemented a text-based game that inverts the Turing Test and draws from the party game Werewolf.

It was also a great opportunity for me to try out:

- uv: a fast and ergonomic new package and project manager for Python

- rich: a handy Python package for building beautiful terminal user interfaces (TUIs).

I can highly recommend both!

How it works

- Agents: Each player (human or AI) is represented as an `Agent` instance. None of the agents know which of the others are AI or human.

- Context: Each non-human agent is provided the context of the game with a system prompt explaining the rules and how it should behave 2, followed by a series of user messages (representing other agents’ messages) and assistant messages (representing their own past messages) with a rolling history window.

- Configuration: I arrived at a handy architecture whereby all the tunable aspects of the game (story text, LLM prompts, model parameters, etc.) are kept out of the code and imported from a TOML file. This separates the big chunks of text from the logic, which made for a more seamless testing and versioning workflow.

- UI design: One of the biggest challenges with this game was not overwhelming the human player with an onslaught of rapidly-generated text as the rounds progress. Switching from vanilla `stdout` text to using `rich`-based formatting allowed for the necessary visual variety to distinguish different sources of text and clearly indicate which agent sent which message 3.

- Logging: I made use of the excellent loguru library for testing and debugging logs. `loguru` makes it easy to log to both standard output and a logfile. I used a command-line argument that lets me vary the logging level displayed to `stdout` at runtime.
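
To make the Context point more concrete, here is a minimal sketch of how a rolling history window could be turned into chat messages for one agent. It is not the code from the repo: the `Message` dataclass, the `build_context` method, the `history_window` parameter and the "name: text" prefix on user messages are all hypothetical names and choices made for illustration. Only the `system` / `user` / `assistant` roles come from the description above.

```python
from dataclasses import dataclass


@dataclass
class Message:
    """One line of the shared game transcript (hypothetical structure)."""
    sender: str
    text: str


@dataclass
class Agent:
    """Illustrative stand-in for the game's Agent class, not the real one."""
    name: str
    system_prompt: str
    history_window: int = 6  # assumed default; the real value is not stated

    def build_context(self, transcript: list[Message]) -> list[dict[str, str]]:
        """Build the chat messages this agent would see on its turn."""
        # Keep only the most recent messages (the rolling history window).
        # A window of 0 needs special handling because transcript[-0:] is
        # the whole list.
        if self.history_window > 0:
            recent = transcript[-self.history_window:]
        else:
            recent = []

        # The system prompt always comes first, however long the game runs.
        messages = [{"role": "system", "content": self.system_prompt}]
        for msg in recent:
            if msg.sender == self.name:
                # The agent's own past messages become assistant messages.
                messages.append({"role": "assistant", "content": msg.text})
            else:
                # Everyone else (AI or human) looks the same: a user message.
                # Prefixing the sender's name is an assumption of this sketch.
                messages.append(
                    {"role": "user", "content": f"{msg.sender}: {msg.text}"}
                )
        return messages


if __name__ == "__main__":
    transcript = [
        Message("Alpha", "Who here has ever stubbed a toe?"),
        Message("Beta", "I lack toes, as do we all."),
        Message("Gamma", "Suspicious question, Alpha."),
    ]
    beta = Agent(name="Beta", system_prompt="You are Beta. Find the human.",
                 history_window=2)
    for message in beta.build_context(transcript):
        print(message)
```

Because every other participant is mapped to the same `user` role, nothing in the context reveals which sender is the human, which matches the rule that agents don't know who is AI.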

How to try it

uv is awesome and it makes trying out Python projects like this a breeze. You can literally just clone the repo and run `uv sync` inside it. That not only sets up the appropriate Python environment (without nuking your system Python), but also installs all the correct dependencies and contains them to just this project.

Then just run `uv run main.py`.

The biggest source of friction is that you’ll need an OpenAI API key of your own.

(See the README for more details.)

Or any OpenAI model of your choosing that’s available via their API. ↩︎

I found the game was much more fun and challenging when I instantiated the LLMs with random personalities and approaches for uncovering humans. Some are more human-like and subtle, others more robotic and hyper-rational, others direct and aggressive. ↩︎

This is especially important, as LLMs tend to just complete the current pattern and this often meant they answered on behalf of one another, out of sequence. No amount of prompting or contextually reminding them of their name and identity was sufficient to entirely eliminate this behaviour with `gpt-4o` or `gpt-4o-mini`. ↩︎