See the open-source code on GitHub, and the Devpost page from the hackathon.

UPDATE: I think the most interesting part of this project is how quickly the impressive parts of our work became redundant.

We spent hours configuring and spinning up (expensive) GPU-accelerated EC2 instances. We worked late into the night building APIs and interfaces to adapt the LLaVA model to our use case and integrate it with Claude. This is what was required to implement a vision+text multimodal LLM in early November 2023. We were one of only a couple of teams (of around 100) at the hackathon to use multimodal input.

The very next week, OpenAI made GPT-4 with Vision widely available via a simple and cheap API. The following week, we built a way cooler multimodal LLM application for a fraction of the price and effort.

The rate at which the frontier of accessible GenAI is moving is astounding. Yesterday’s cutting edge is today’s table stakes.

The project

For Anthropic’s AI hackathon in London, I teamed up with my good friend Tom Tumiel to build something cool with multimodal LLMs in under 24 hours.

Our vision for the next era of AI is one in which everyone has limitless access to professional help and guidance, especially in healthcare.

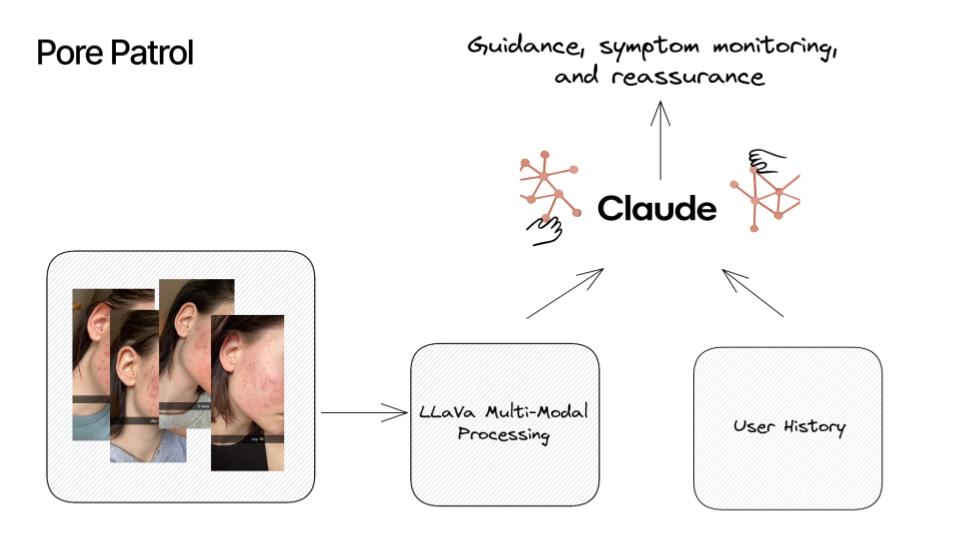

So we built a multimodal GenAI product for therapeutic dermatology to illustrate both the potential and versatility of this technology.

What it does

Health anxiety causes major distress and costs the NHS £400m annually (and who’s more anxious than pimply teens?). We made an AI health assistant that mitigates anxiety about skin conditions like acne by providing personalised guidance, ongoing symptom monitoring, and ever-available reassurance.

How we built it

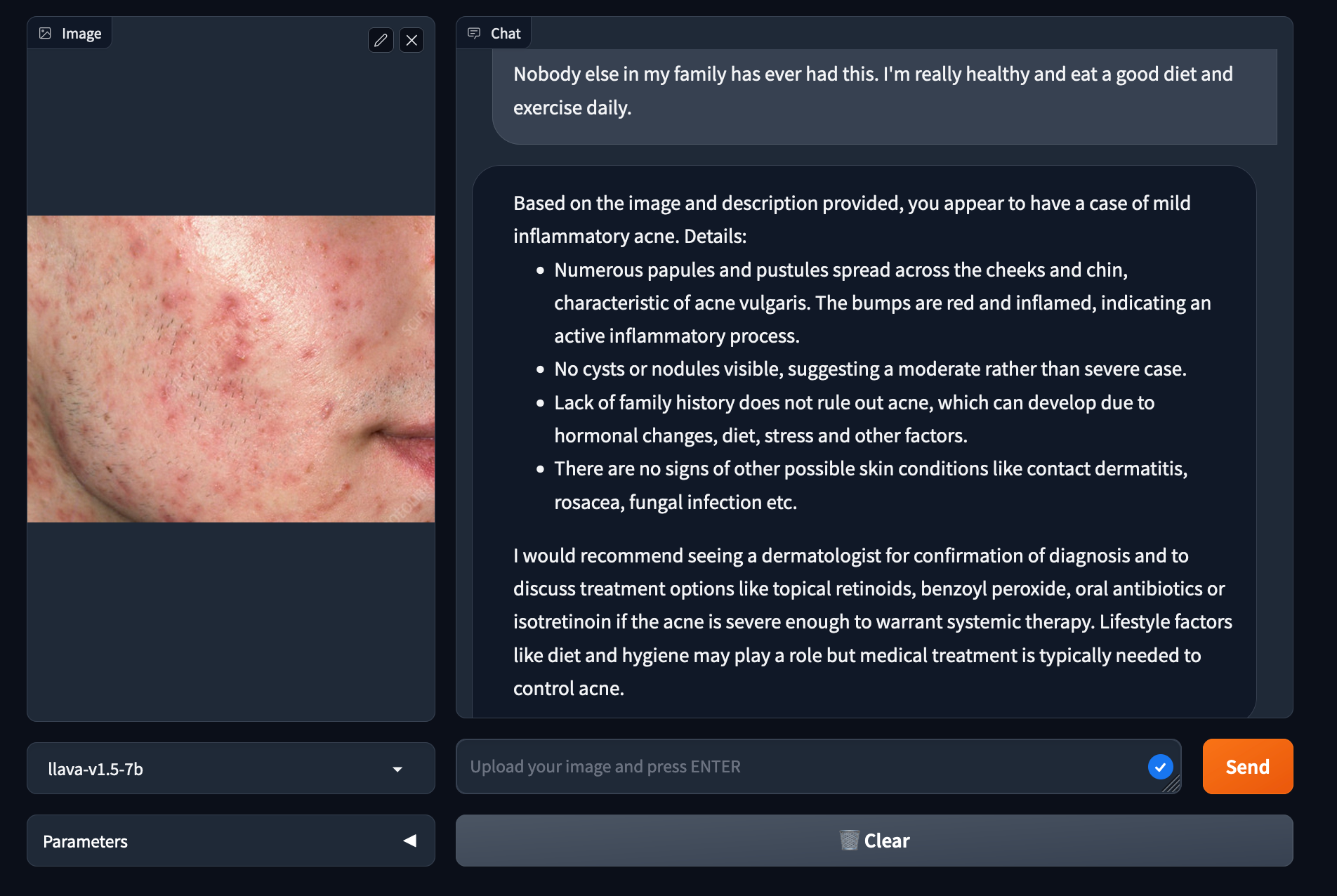

- We spun up a custom instance of the recently released multimodal LLaVA model and prompt-engineered it to medically interpret user images. With 4-bit quantisation, LLaVA can be run on-device for further image privacy.

- Anthropic’s Claude LLM then integrates image descriptions with user history, harnessing its extended context and broad knowledge for tailored therapeutic suggestions.

- Users can then converse with our agent, discussing their concerns and receiving compassionate but well-informed guidance.
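To make the flow above concrete, here is a minimal sketch of how the two stages could be wired together. It is not our actual hackathon code: the prompt text, `UserRecord`, `build_guidance_prompt`, and `run_turn` are all hypothetical names for illustration, and the two model calls are passed in as plain functions (`describe_image` standing in for the LLaVA endpoint, `ask_text_model` for Claude) so that no specific API is assumed.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical prompt; the prompts we actually used are not reproduced here.
VISION_PROMPT = (
    "Describe the visible skin features in this image in neutral, "
    "clinical terms. Do not give a diagnosis."
)


@dataclass
class UserRecord:
    """Running history for one user (hypothetical structure)."""
    notes: List[str] = field(default_factory=list)


def build_guidance_prompt(image_description: str, record: UserRecord, question: str) -> str:
    """Merge the vision model's description with the user's history."""
    history = "\n".join(f"- {note}" for note in record.notes) or "- (no previous entries)"
    return (
        "Image description from the vision model:\n"
        f"{image_description}\n\n"
        "Previous entries for this user:\n"
        f"{history}\n\n"
        f"User question: {question}\n"
        "Respond with compassionate, well-informed guidance."
    )


def run_turn(
    image_bytes: bytes,
    question: str,
    record: UserRecord,
    describe_image: Callable[[bytes, str], str],  # stand-in for the LLaVA call
    ask_text_model: Callable[[str], str],         # stand-in for the Claude call
) -> str:
    """One conversation turn: image -> description -> tailored reply."""
    description = describe_image(image_bytes, VISION_PROMPT)
    prompt = build_guidance_prompt(description, record, question)
    reply = ask_text_model(prompt)
    # Keep the description so later turns can track how symptoms change.
    record.notes.append(description)
    return reply


if __name__ == "__main__":
    # Stub models so the sketch runs without any network access.
    record = UserRecord(notes=["mild redness on left cheek"])
    reply = run_turn(
        b"fake-image-bytes",
        "Is this getting worse?",
        record,
        describe_image=lambda img, prompt: "three small papules on the chin",
        ask_text_model=lambda prompt: f"(model reply to {len(prompt)} chars of prompt)",
    )
    print(reply)
```

Passing the model calls in as functions keeps the vision and text stages swappable, which is roughly what made it easy to replace the self-hosted LLaVA stage with a hosted vision API later.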