DALL-E, meet Salvador

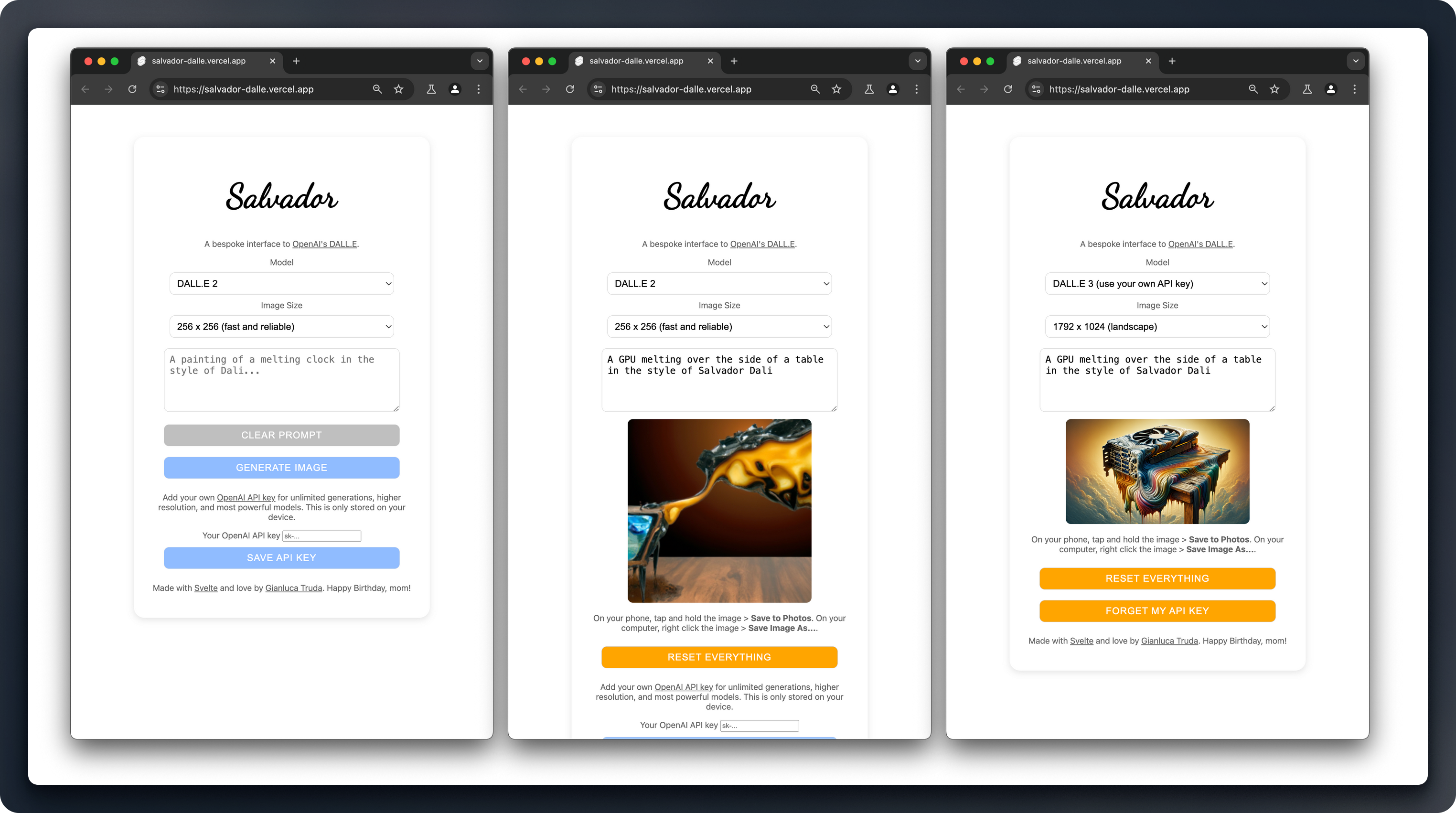

Introducing Salvador: a clean, mobile-first interface for OpenAI’s DALL-E image generator, built with Svelte. Generate visual inspiration with AI using simple prompts and either DALL-E 2 or DALL-E 3.

- Try it at salvador-dalle.vercel.app

- Find the code and guide to deploy your own at github.com/gianlucatruda/salvador

Look mom, no React

This is one from mid-2024 that I’ve recently polished up and released.

I’ve built many things in pure JavaScript, which is great until you need to do dynamic DOM updates combined with web requests. I’ve built a few things with React, which handles those DOM updates at the cost of defacing your codebase to solve problems only relevant to Facebook-scale platforms.

I had never built anything with Svelte, but had heard that it’s lovely and was looking for an excuse to try it:

- Unlike React, Angular, and Vue (which ship a JavaScript runtime to the browser and use a virtual DOM), Svelte actually compiles declarative code (that’s nice to write) into imperative code (that runs fast and lean with native browser APIs).

- `.svelte` files are written in JS or TS. Svelte compiles them to vanilla JS, which is handy for backwards compatibility.

- Svelte kinda just looks like HTML, but can directly reference variables with a template-like syntax.

My mom is an outstanding visual artist and was curious about using AI-generated imagery as part of her ideation process, but most user interfaces to these models are either generic and overpriced or overcomplicated and technical. I mean, I love the idea that Midjourney only operates out of a Discord server, but that’s kept me from using it much and renders it downright non-existent for many people. And ComfyUI is clearly cracked, but is not for running on an iPhone.

Since I have a bunch of OpenAI credits from hackathon winnings, I decided to gift my mom bespoke software: a clean, mobile-first web app that makes quick iteration and inspiration easy. The result is Salvador and I’m triply delighted with it – I like how it turned out, I’ve really enjoyed using Svelte, and my mom has actually found it useful.

There was just one small problem…

The interesting challenge

The core functionality is straightforward. The user enters the image prompt into a text box, picks some options from form elements, and hits a button. That triggers a function that bundles the inputs into the body of an HTTP POST request to OpenAI’s image generation API. The response contains a URL where the resulting image is hosted, which is injected into an `<img>` element on the page.

Off to a svelte start

Even adding the constraint of dynamic dropdown menus (which only allow valid combinations of model and resolution) and UX niceties (like loading spinners and pretty CSS), this was only about 100 lines of highly readable Svelte. I was able to both learn Svelte and whip this up in a single afternoon.

```svelte
<script lang="ts">
  import { onMount } from "svelte";

  // Define our variables
  let btnText = "Generate Image";
  let promptText = "";
  let modelName = "dall-e-2";
  let imgSize = "256x256";
  let uiDisabled = false;
  let imgURL = "";
  let lastPrompt: string | null = null;

  // Load the previous prompt from browser localStorage
  onMount(() => {
    if (localStorage.getItem("lastPrompt")) {
      lastPrompt = localStorage.getItem("lastPrompt");
    }
  });

  const loadPreviousPrompt = () => {
    if (!lastPrompt) {
      console.error("No last prompt specified.");
      return; // Nothing to load, so leave the prompt untouched
    }
    promptText = lastPrompt;
  };

  const makeImage = () => {
    const query = `${modelName} (${imgSize}): "${promptText}"`;
    console.log("Query:", query);
    uiDisabled = true;
    btnText = "Generating...";
    makeRequest();
  };

  async function makeRequest() {
    localStorage.setItem("lastPrompt", promptText);
    const bodyData = {
      model: modelName,
      prompt: promptText,
      n: 1,
      size: imgSize,
    };
    const response = await fetch("https://api.openai.com/v1/images/generations", {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
      },
      body: JSON.stringify(bodyData),
    });
    const result = await response.json();
    // Truncated: Error handling
    imgURL = result.data[0].url;
  }
</script>

<main>
  <!-- Truncated boilerplate -->
  <div class="modelParams">
    <form>
      <!-- Simple form for model selection -->
    </form>
    {#if modelName == "dall-e-2"}
      <form>
        <!-- Only show the options for DALL-E 2 -->
      </form>
    {:else if modelName == "dall-e-3"}
      <form>
        <!-- Only show the options for DALL-E 3 -->
      </form>
    {/if}
    <textarea
      id="prompt"
      placeholder="A painting of a melting clock in the style of Dali..."
      bind:value={promptText}
      disabled={uiDisabled}
    ></textarea>
  </div>
  {#if lastPrompt && !promptText}
    <button
      class="loadPrevButton"
      on:click={loadPreviousPrompt}
      disabled={promptText == lastPrompt}>Load previous prompt</button
    >
  {:else if !imgURL}
    <button
      class="clearPromptButton"
      on:click={() => {
        promptText = "";
      }}
      disabled={!promptText || uiDisabled}>Clear prompt</button
    >
  {/if}
  {#if !imgURL}
    <button on:click={makeImage} disabled={uiDisabled || !promptText}
      >{btnText}</button
    >
  {/if}
  <div class="results">
    {#if imgURL}
      <img src={imgURL} alt="Generated AI artwork" />
    {:else if uiDisabled}
      <div class="spinner"></div>
    {/if}
  </div>
  <!-- Truncated -->
</main>

<style>
  /* CSS Styling here. Simple enough for a one-pager. */
</style>
```
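The "valid combinations of model and resolution" constraint can be sketched as a small lookup table. This is an illustration rather than Salvador's actual code: the names `VALID_SIZES`, `sizesFor`, and `coerceSize` are hypothetical, and the size lists are an assumption based on OpenAI's documented options for these two models, so check them against the current API reference.

```typescript
// Hypothetical names; size lists are assumed from OpenAI's documented options.
type ModelName = "dall-e-2" | "dall-e-3";

const VALID_SIZES: Record<ModelName, readonly string[]> = {
  "dall-e-2": ["256x256", "512x512", "1024x1024"],
  "dall-e-3": ["1024x1024", "1792x1024", "1024x1792"],
};

// Sizes to offer in the dropdown for the currently selected model.
function sizesFor(model: ModelName): readonly string[] {
  return VALID_SIZES[model];
}

// Keep the current size if the model supports it; otherwise fall back
// to the first size listed for that model.
function coerceSize(model: ModelName, size: string): string {
  const sizes = sizesFor(model);
  return sizes.includes(size) ? size : sizes[0];
}
```

Calling something like `coerceSize` whenever the model selection changes would keep the size dropdown from holding a value the chosen model rejects.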

What’s the catch?

With only client-side code, the request is made on the client device. Normally, that’s no problem. But these requests to the OpenAI API reveal my secret API key to anyone who visits the web app. Even with user authentication, this would be very bad practice.

And therein lies the rub. Making beautiful bespoke software for my mom is one thing. Making it safe for public access is another.

Don’t forget your own backend

I wracked my brain (and GPT-4’s GPUs) with the problem for quite some time, but I know of no way to solve this purely in client-side code. Even in a compiled binary, an API key can probably still be extracted, because it has to be stored somewhere as a string.

So I needed to route it via my own server. In the past, I’ve used AWS EC2 instances or headless Replit servers to serve up a quick API for these scenarios, but Svelte comes with tooling to solve problems like this: SvelteKit.

SvelteKit allows you to implement server-side rendering (SSR), routing, code-splitting, etc. There’s full support for writing server-side code bundled with Svelte, so why not try it?

“Whereas Svelte is a component framework, SvelteKit is an app framework (or ‘metaframework’, depending on who you ask) that solves the tricky problems of building something production-ready” – Svelte docs

It took me another couple of hours to figure out the specifics and the syntax, but effectively I could just route the request from the client via my server-side code, attach my OpenAI API key (safely loaded from an environment variable), forward it on to OpenAI’s API, and then route the response back to the client.

I was delighted by how simple this turned out to be with SvelteKit (once I had read enough of the docs to understand it) and it all worked pretty seamlessly with my Vite build. Excellent.

```js
import { OPENAI_API_KEY } from "$env/static/private";
import { json } from "@sveltejs/kit";
import { error } from "@sveltejs/kit";

/** @type {import('./$types').RequestHandler} */
export async function POST({ request }) {
  const url = "https://api.openai.com/v1/images/generations";
  const bodyData = await request.json(); // Convert stream to JSON
  console.log("Ready to make OpenAI request", { bodyData });
  const response = await fetch(url, {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${OPENAI_API_KEY}`,
    },
    body: JSON.stringify(bodyData),
  });
  const result = await response.json();
  if (result.error) {
    console.error(result.error);
  }
  return json(result);
}
```

(And then update the request URL to `/api/generate` in the client-side code.)

It even deployed to Vercel without any additional fiddling and worked in production too – with no leaked API keys.
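One thing a pass-through endpoint like this leaves open is that it forwards whatever JSON the client sends, billed to the server's key. A minimal sketch of one way to narrow that, assuming only `model`, `prompt`, and `size` should ever be forwarded, follows. The helper `sanitizeBody`, the `ImageRequest` shape, and the prompt cap are all hypothetical and not taken from Salvador's code.

```typescript
// Hypothetical helper: illustrates whitelisting fields before forwarding.
interface ImageRequest {
  model: string;
  prompt: string;
  size: string;
  n: 1;
}

const ALLOWED_MODELS = ["dall-e-2", "dall-e-3"];
const MAX_PROMPT_LENGTH = 1000; // arbitrary cap chosen for this sketch

// Returns a cleaned request body, or null if the input is unusable.
function sanitizeBody(input: unknown): ImageRequest | null {
  if (typeof input !== "object" || input === null) return null;
  const { model, prompt, size } = input as Record<string, unknown>;
  if (typeof model !== "string" || !ALLOWED_MODELS.includes(model)) return null;
  if (typeof prompt !== "string" || prompt.trim() === "") return null;
  if (prompt.length > MAX_PROMPT_LENGTH) return null;
  if (typeof size !== "string" || !/^\d+x\d+$/.test(size)) return null;
  // Copy only the known fields and pin n to 1, so nothing else is forwarded.
  return { model, prompt, size, n: 1 };
}
```

In the endpoint, the result of `await request.json()` could be passed through a function like this, with a 400 response (for example via the `error` helper the endpoint already imports) when it returns `null`.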

I delightedly shared the public URL with my mom. But when I was making her a demo video, I noticed that when I did larger-resolution DALL-E 2 generations or any DALL-E 3 generations, the app would hang and the request would never resolve.

Your serverless server serves less

It wasn’t an issue with my code or the OpenAI API. I wasn’t getting any response back, so there was no failure message. The client side was simply being ghosted. I checked my OpenAI dashboard and saw no issues or rate limits, and the code still worked perfectly when run locally.

After checking my free-tier Vercel logs and puzzling it over, I discovered that Vercel deploys the SvelteKit server as a “serverless” function. So when the client made a request to the server-side API, Vercel had to spin up that code somewhere, run it, forward the request to OpenAI, and return the response to the client.

But Vercel’s free tier times these serverless workloads out after 10 seconds. That meant it was often able to complete the end-to-end request for smaller workloads, but was frequently cut off while waiting for the OpenAI API to respond with a high-resolution result, leaving my client-side request orphaned to the void.

Annoying! But I am not dumb enough to ever give Vercel my payment information. And frankly, I actually find DALL-E 2 images much more artistically interesting and unusual than the generic, overly common slop that DALL-E 3 now gives. To my relief, my mom actually found it perfect for her use case.
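The hanging request described above is a promise that never settles, so the spinner never goes away. One possible client-side guard is a deadline enforced with `AbortController`. This is a sketch, not something taken from Salvador's code: the helper name `withTimeout` and the 15-second figure in the usage comment are assumptions, and it only works for tasks that honour the abort signal (as `fetch` does).

```typescript
// Hypothetical helper: runs an abortable task with a deadline.
async function withTimeout<T>(
  run: (signal: AbortSignal) => Promise<T>,
  timeoutMs: number,
): Promise<T> {
  const controller = new AbortController();
  // Signal an abort once the deadline passes.
  const timer = setTimeout(() => controller.abort(), timeoutMs);
  try {
    return await run(controller.signal);
  } finally {
    clearTimeout(timer); // Always clear the timer, on success or failure
  }
}

// Usage in the browser (the 15 seconds is an arbitrary example value):
// const response = await withTimeout(
//   (signal) => fetch("/api/generate", { method: "POST", body, signal }),
//   15_000,
// );
```

With `fetch`, the abort surfaces as a rejected promise, which gives the calling code a place to re-enable the UI and show a message instead of waiting forever.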

Just write more code

I left the project in stealth and it ran fine for many months while I was at the Recurse Center, building other things. But recently I came back to it and, with some help from Claude, whipped up a quick modification to the main Svelte app which allows users to add their own OpenAI API key (stored in the browser localStorage, just like the previous prompt). If a key is present, the request is routed directly to the OpenAI API, bypassing my time-limited SvelteKit router.

```ts
onMount(() => {
  if (localStorage.getItem("lastPrompt")) {
    lastPrompt = localStorage.getItem("lastPrompt");
  }
  if (localStorage.getItem("apiKey")) {
    apiKey = localStorage.getItem("apiKey") || "";
  }
});

async function makeRequest() {
  // ... Setting body data
  let requestURL = "/api/generate";
  if (apiKey != null && apiKey != "") {
    requestURL = "https://api.openai.com/v1/images/generations";
  }
  const response = await fetch(requestURL, {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      "Authorization": `Bearer ${apiKey}`,
    },
    body: JSON.stringify(bodyData),
  });
  // ... Error handling
}
```
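The routing decision can also be pulled out into a small pure function, which makes it easy to test on its own. The sketch below is a variation on the approach, not the code Salvador ships: the helper `pickTarget` and the `RequestTarget` shape are hypothetical, and unlike the snippet above it only attaches the `Authorization` header when a key is actually present.

```typescript
// Hypothetical helper; the two URLs are the ones used in this post.
const PROXY_URL = "/api/generate";
const OPENAI_URL = "https://api.openai.com/v1/images/generations";

interface RequestTarget {
  url: string;
  headers: Record<string, string>;
}

// Choose where to send the request based on whether the user supplied a key.
function pickTarget(apiKey: string | null): RequestTarget {
  const headers: Record<string, string> = { "Content-Type": "application/json" };
  const key = apiKey?.trim();
  if (key) {
    // User-supplied key: go straight to OpenAI and authenticate as the user.
    headers["Authorization"] = `Bearer ${key}`;
    return { url: OPENAI_URL, headers };
  }
  // No key: use the server-side route, which attaches its own key.
  return { url: PROXY_URL, headers };
}
```

The returned `url` and `headers` can then be passed straight to `fetch` along with the method and body.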

Again, the syntax and compilation of Svelte made the UI changes delightfully easy to implement. To me, it just feels much more intuitive and … well… svelte than the JSX of React. Give it a try sometime.

Find the code and guide to deploy your own at github.com/gianlucatruda/salvador

Thanks for checking this out! If you’re interested in my writing, projects, or working with me then please do reach out on X, Bluesky, or by email.

If you think other people might enjoy reading this or any of my other posts, shares are always appreciated.